Dell initiated a comprehensive accessibility audit of more than 600 webpages to verify conformance with the WCAG 2.1 Level A and AA success criteria. By implementing the audit's detailed recommendations, Dell aimed not only to improve its web accessibility but also to apply those improvements in broader strategic and operational contexts.

As project lead, I made several key contributions:

1. Developed Testing and Reporting Frameworks

2. Mentored Team Members

3. Accessibility Testing & Reporting

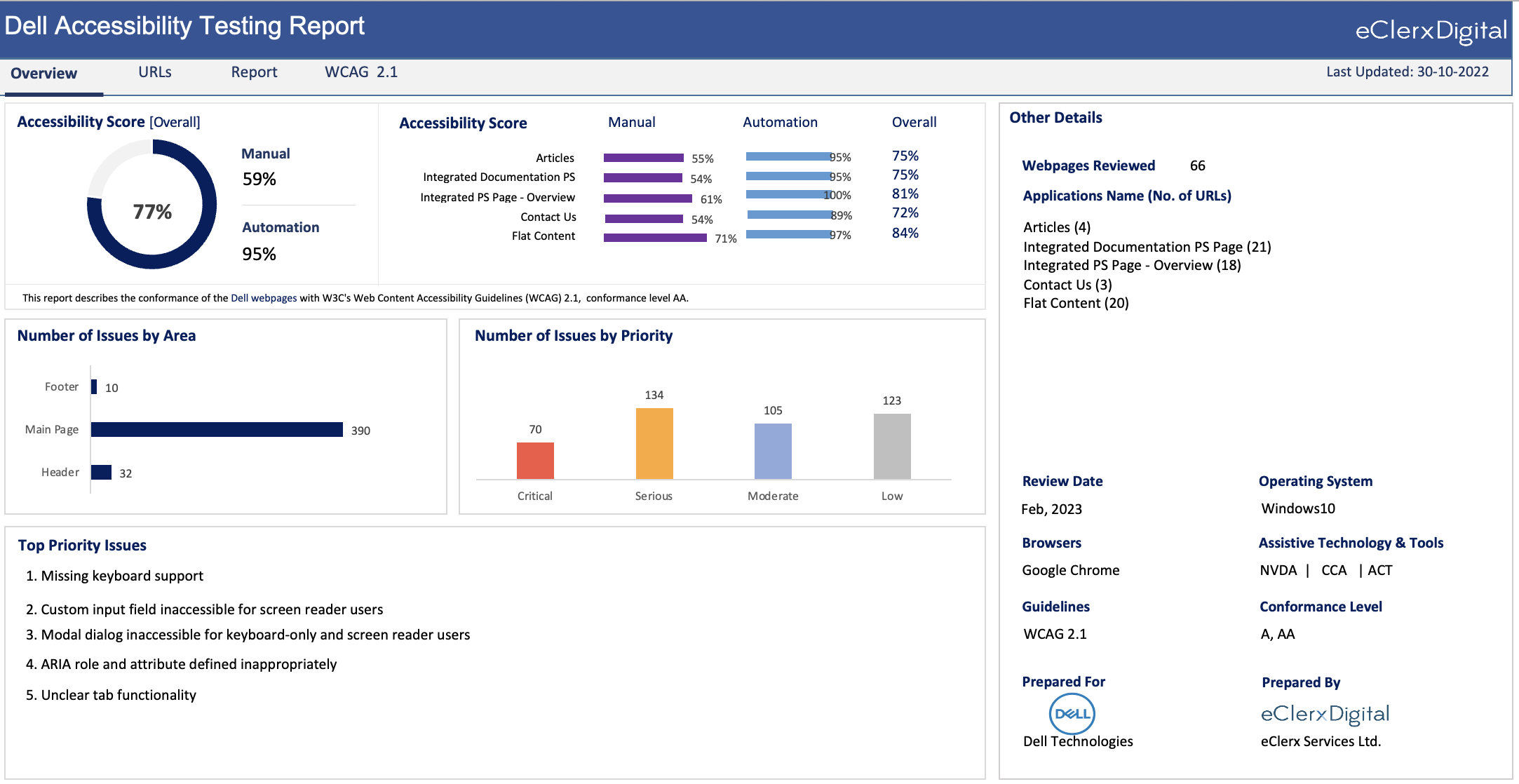

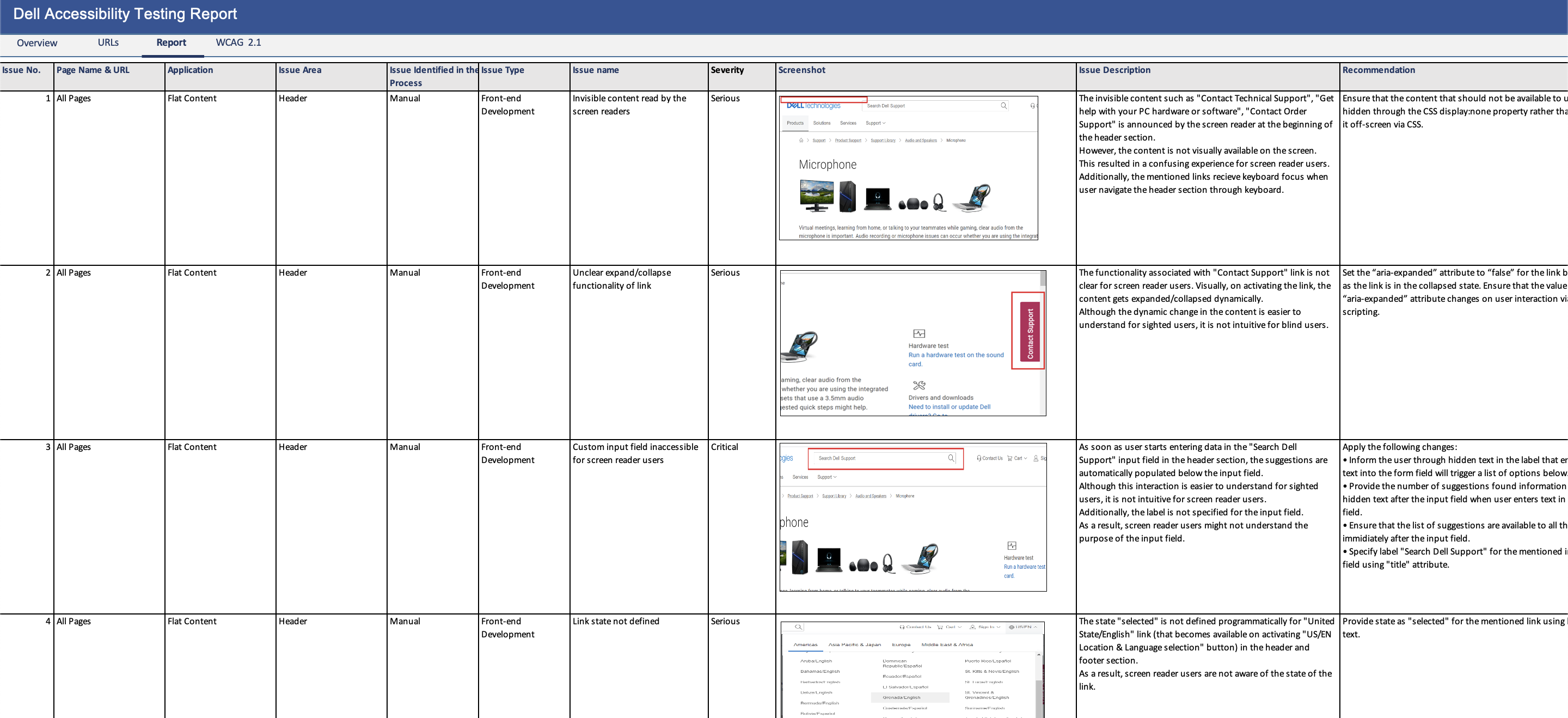

This report was one of several provided to Dell, each covering a specific set of URLs that were rigorously tested against the WCAG guidelines. It recorded the number of accessibility issues found, categorized by severity, so that Dell could decide which problems required immediate resolution. By highlighting the most critical areas, the report gave Dell a roadmap for improving its web accessibility, moving toward compliance and a better user experience.
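To make the severity breakdown concrete, here is a minimal Python sketch of tallying findings by severity and listing them most urgent first. The four-level scale, the field names (`url`, `criterion`, `severity`) and the sample findings are all hypothetical; the source does not state which severity scale or tooling the reports used.

```python
from collections import Counter

# Hypothetical severity scale, most urgent first (not taken from the Dell reports).
SEVERITY_ORDER = ["Critical", "High", "Medium", "Low"]


def severity_summary(issues):
    """Count issues per severity and return (severity, count) pairs in priority order."""
    counts = Counter(issue["severity"] for issue in issues)
    # Counter returns 0 for missing keys, so every level appears in the summary.
    return [(level, counts[level]) for level in SEVERITY_ORDER]


# Made-up findings for a small set of URLs (WCAG 2.1 criterion numbers).
issues = [
    {"url": "/products/laptops", "criterion": "1.1.1", "severity": "Critical"},
    {"url": "/products/laptops", "criterion": "2.4.7", "severity": "High"},
    {"url": "/support", "criterion": "1.4.3", "severity": "Medium"},
    {"url": "/support", "criterion": "1.1.1", "severity": "Critical"},
]

for level, count in severity_summary(issues):
    print(f"{level}: {count}")
```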

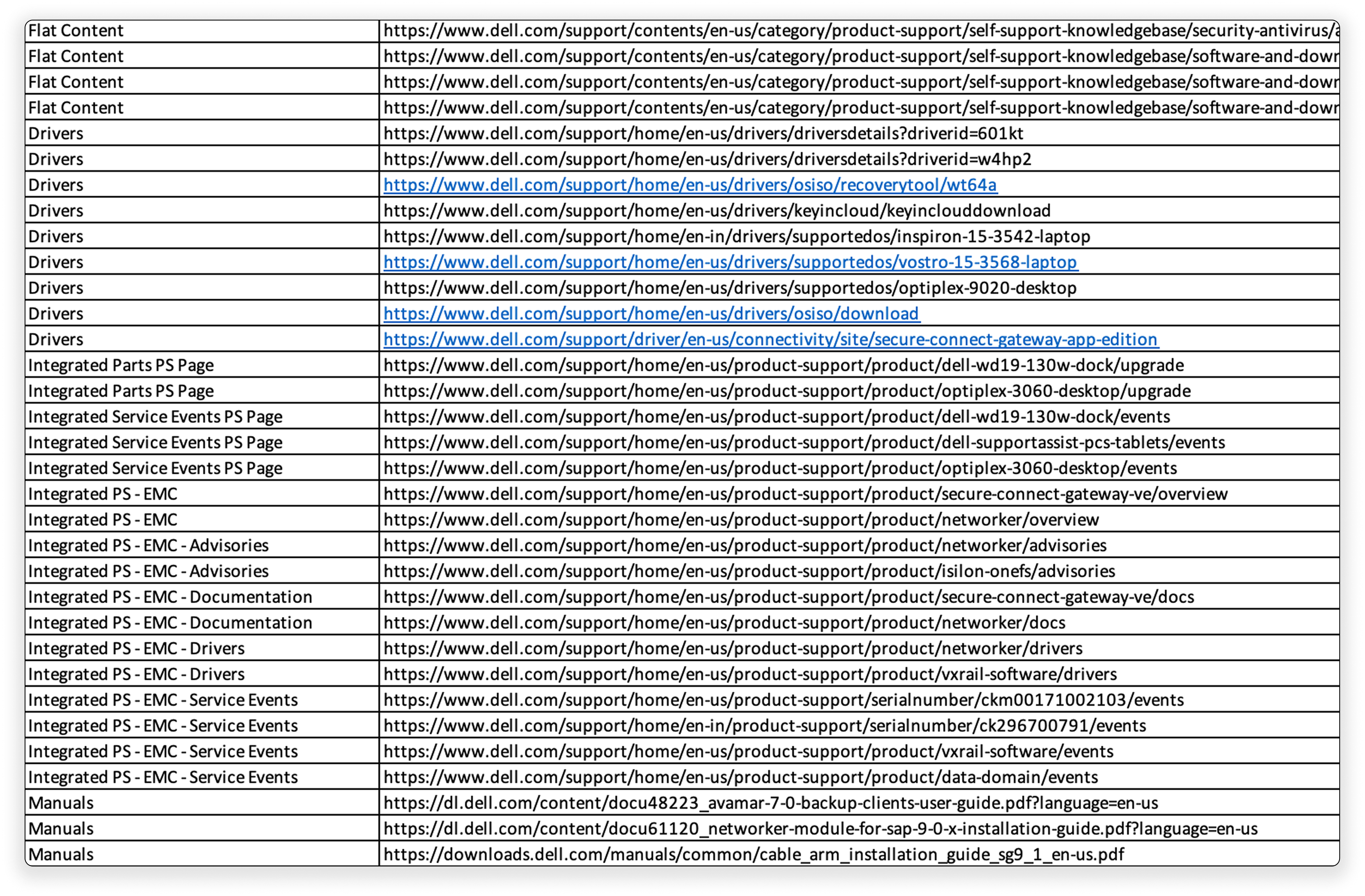

During the planning phase of the audit, we grouped URLs by structural similarity. Because structurally similar pages tend to share the same issues, testing and reporting on them as a group let us apply findings and solutions across multiple pages at once, which significantly shortened each audit cycle and increased the speed and impact of our accessibility improvements.
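One simple way to approximate structural grouping is to reduce each URL to a path "template". The sketch below assumes, hypothetically, that any path segment containing a digit (a model number or ID) is variable, so pages generated from the same layout collapse to one key. The function names and `example.com` URLs are illustrative only; this is not necessarily how the audit's URL groups were defined.

```python
import re
from collections import defaultdict
from urllib.parse import urlparse


def template_key(url):
    """Reduce a URL to a path template; segments containing a digit become {id}.

    The digit rule is a hypothetical heuristic, not the audit's actual grouping rule.
    """
    segments = [seg for seg in urlparse(url).path.split("/") if seg]
    normalized = ["{id}" if re.search(r"\d", seg) else seg for seg in segments]
    return "/" + "/".join(normalized)


def group_urls(urls):
    """Map each path template to the list of URLs that share it."""
    groups = defaultdict(list)
    for url in urls:
        groups[template_key(url)].append(url)
    return dict(groups)


urls = [
    "https://www.example.com/en-us/shop/laptops/laptop-13-0001",
    "https://www.example.com/en-us/shop/laptops/laptop-15-0002",
    "https://www.example.com/en-us/support/contact",
]

for template, members in group_urls(urls).items():
    print(template, len(members))
```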


Used automated testing tools to scan large numbers of pages quickly for common accessibility issues.

Conducted in-depth, hands-on evaluations to catch accessibility issues that automated tools might have overlooked.

Reported all issues in Excel using predefined templates.
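Automated scanners work by running rule checks against page markup and recording each failure in a fixed format. The Python sketch below shows that pattern for a single rule, `<img>` elements with no `alt` attribute (relevant to WCAG 2.1 Success Criterion 1.1.1, Non-text Content, Level A), and writes the results to CSV as a standard-library stand-in for an Excel template. The class, functions, column names and severity label are hypothetical; this is not the tool or template used in the audit.

```python
import csv
import io
from html.parser import HTMLParser

# Hypothetical issue-log columns; the real template's columns are not listed in the source.
COLUMNS = ["url", "wcag_criterion", "level", "element", "description", "severity"]


class MissingAltChecker(HTMLParser):
    """One automated rule: flag <img> elements that have no alt attribute at all."""

    def __init__(self):
        super().__init__()
        self.findings = []

    def handle_starttag(self, tag, attrs):
        # Also called for self-closing <img ... /> via the default handle_startendtag.
        if tag == "img" and "alt" not in dict(attrs):
            self.findings.append(self.get_starttag_text())


def check_page(url, html):
    """Run the rule on one page and return issue rows matching COLUMNS."""
    checker = MissingAltChecker()
    checker.feed(html)
    checker.close()
    return [
        {
            "url": url,
            "wcag_criterion": "1.1.1",
            "level": "A",
            "element": snippet,
            "description": "Image has no alt attribute",
            "severity": "Critical",  # hypothetical severity assignment
        }
        for snippet in checker.findings
    ]


def write_issue_log(rows, stream):
    """Write issue rows, with a header line, to any text stream as CSV."""
    writer = csv.DictWriter(stream, fieldnames=COLUMNS)
    writer.writeheader()
    writer.writerows(rows)


page = '<main><img src="logo.png" alt="Company logo"><img src="hero.jpg"></main>'
rows = check_page("https://www.example.com/", page)
buffer = io.StringIO()
write_issue_log(rows, buffer)
print(buffer.getvalue())
```

An empty `alt=""` is deliberately not flagged, since it is the accepted way to mark a decorative image; judging whether a non-empty alt text is actually meaningful is the kind of check that still needs a human reviewer.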

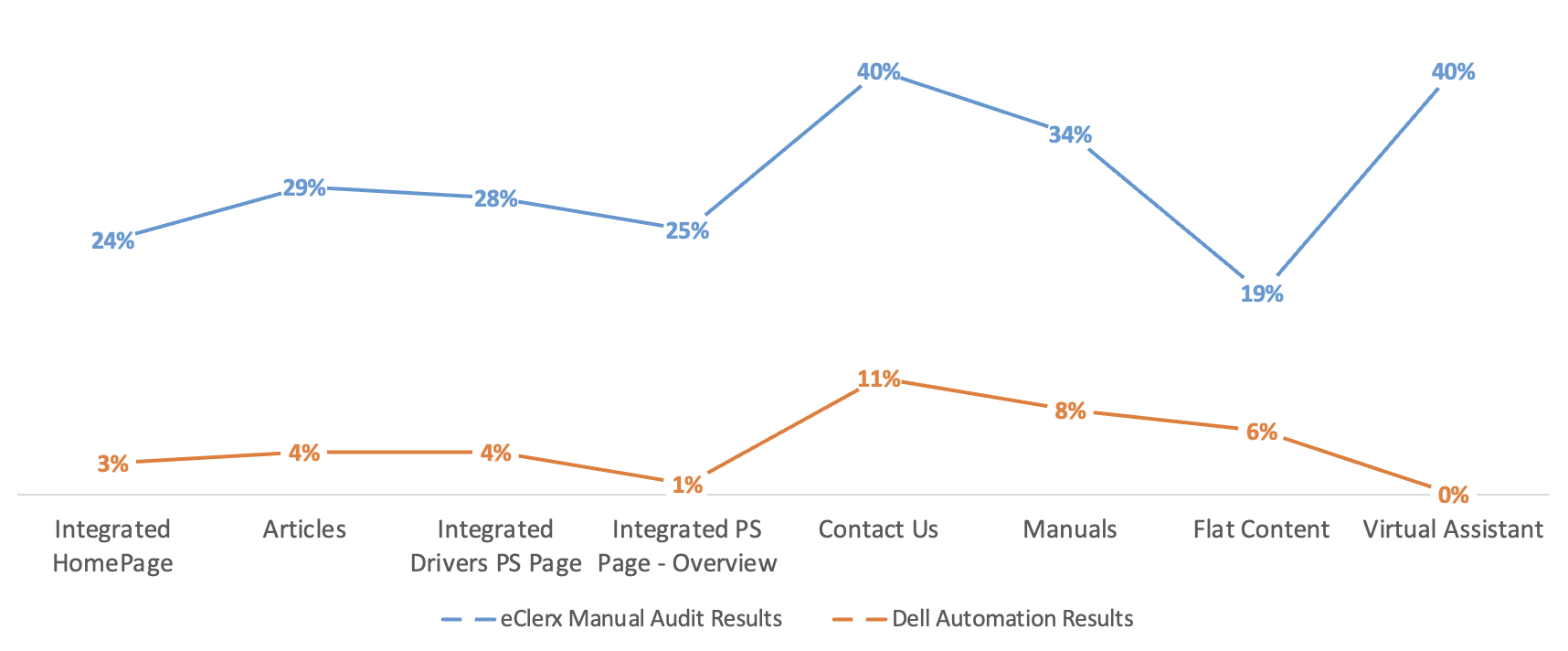

However, automated testing covered only a fraction of the WCAG success criteria, so we also manually reviewed each of the more than 600 webpages to provide comprehensive, actionable insights.

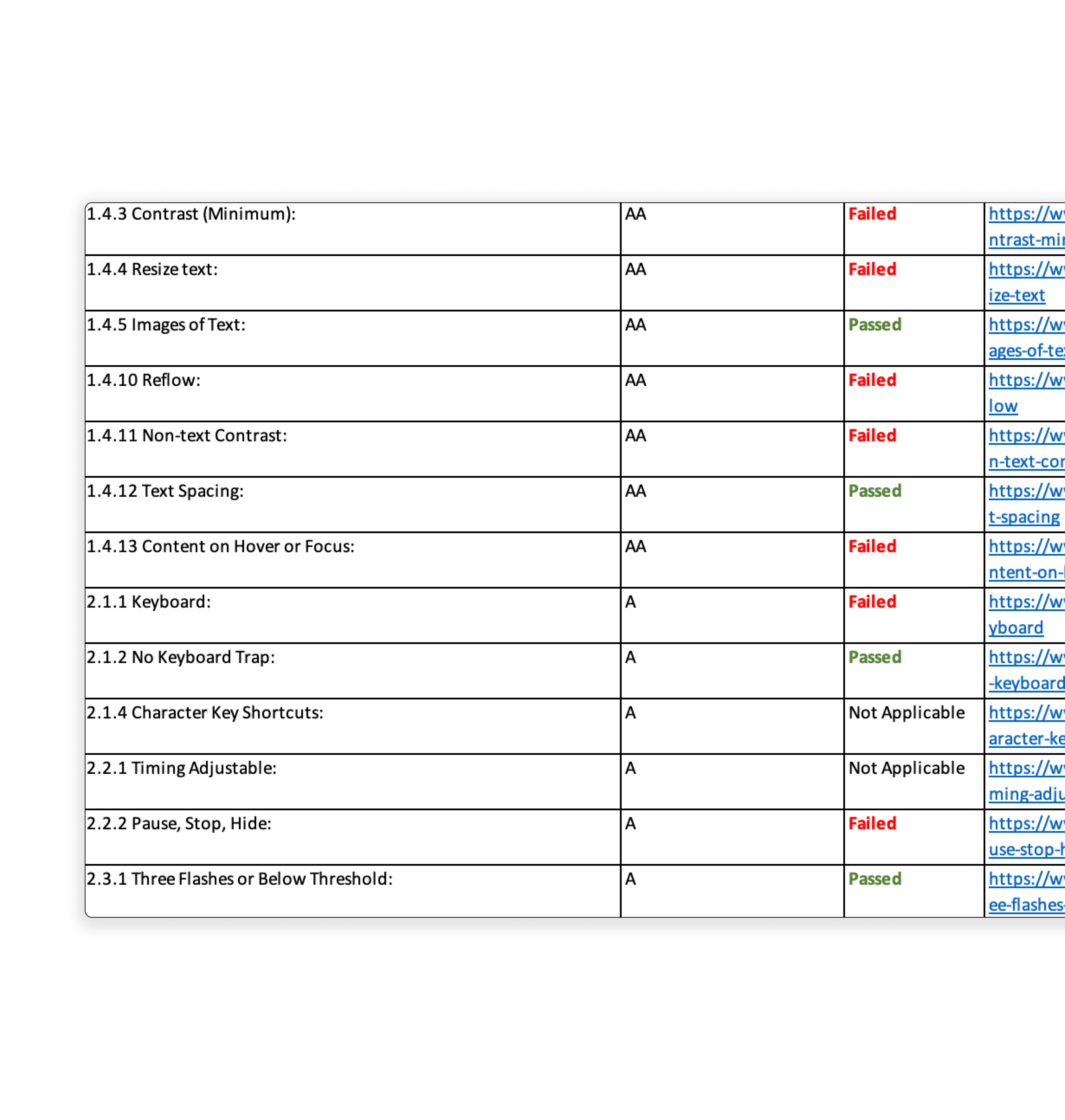

During the manual testing phase, we used a range of techniques, including keyboard testing and voice assistant tools, to evaluate each URL against the WCAG 2.1 Level A and AA success criteria. We documented every identified issue in an Excel sheet. This documentation included several crucial details:

This structured documentation process gave Dell an actionable roadmap toward full web accessibility.

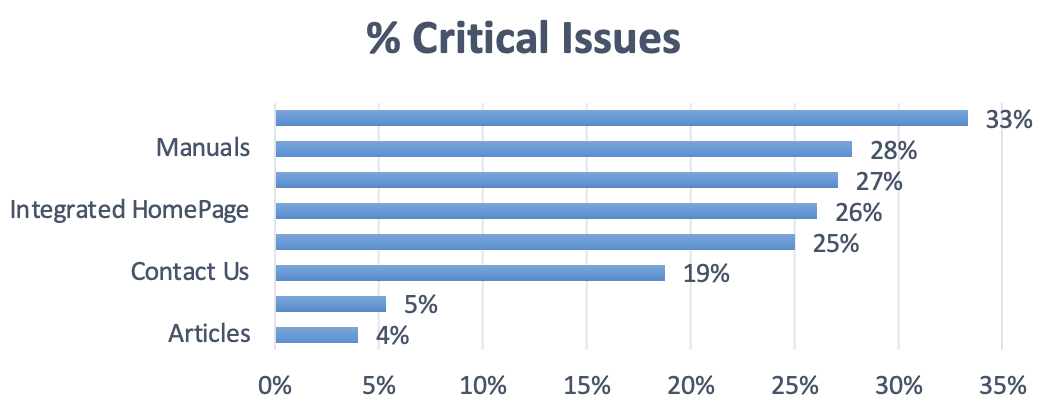

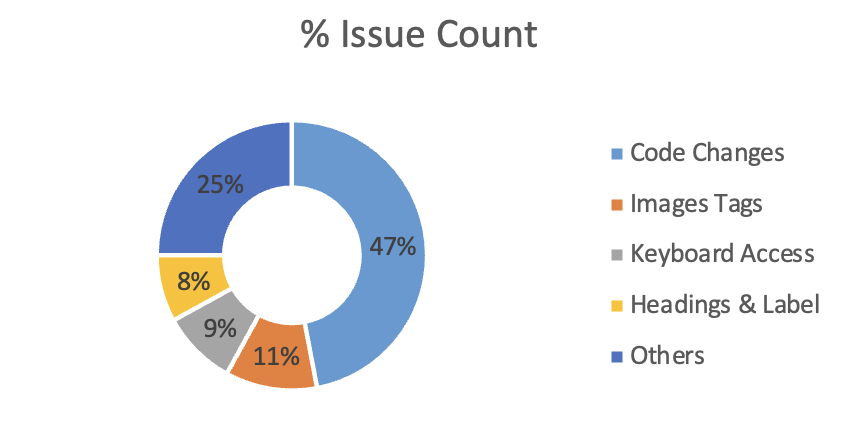

In the reporting phase, we consolidated the findings from all URL categories into a high-level overview of the accessibility issues. This overview was essential for understanding how severe the issues were and how they were distributed across different webpages. To make the data clearer and quicker to read, we visualized it in Power BI. The dashboards let the team see at a glance which areas required immediate attention and supported better-informed decisions about prioritization and resource allocation for remediation.
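The consolidation step can be sketched as a pivot of issue records into a URL-group by severity matrix, sorted so the groups with the most critical issues come first, then exported as a flat CSV, a format Power BI can import. The group names, severity scale and sample data below are hypothetical, and this is not the actual pipeline behind the Power BI report.

```python
import csv
import io
from collections import Counter

# Hypothetical severity scale; issues with any other label are not counted.
SEVERITY_ORDER = ["Critical", "High", "Medium", "Low"]


def consolidate(issues):
    """Pivot issue records into one row per URL group with a count per severity."""
    counts = Counter((issue["group"], issue["severity"]) for issue in issues)
    groups = sorted({issue["group"] for issue in issues})
    rows = [
        {
            "group": group,
            **{level: counts[(group, level)] for level in SEVERITY_ORDER},
            "total": sum(counts[(group, level)] for level in SEVERITY_ORDER),
        }
        for group in groups
    ]
    # Most critical issues first, then the largest overall backlog.
    return sorted(rows, key=lambda row: (row["Critical"], row["total"]), reverse=True)


def to_csv(rows):
    """Serialize the matrix as CSV text suitable for a BI tool import."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=["group", *SEVERITY_ORDER, "total"])
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


issues = [
    {"group": "Product detail", "severity": "Critical"},
    {"group": "Product detail", "severity": "Medium"},
    {"group": "Support", "severity": "Low"},
    {"group": "Product listing", "severity": "Critical"},
    {"group": "Product listing", "severity": "Critical"},
]

matrix = consolidate(issues)
print(to_csv(matrix))
```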